The Cell processor is probably the most mythical chip in console history. Many say the Cell had buckets of untapped potential, citing complex hardware design as the primary reason the system was never fully tapped. But do these claims hold water in any scenarios, practical or not? The truth about the chip isn't as straightforward as a set of statistics or a benchmark. These claims are partially correct, in fact; the PS3 was more capable than it showed in any games that were produced for the system. The issue runs deeper than difficulty with the design, however.

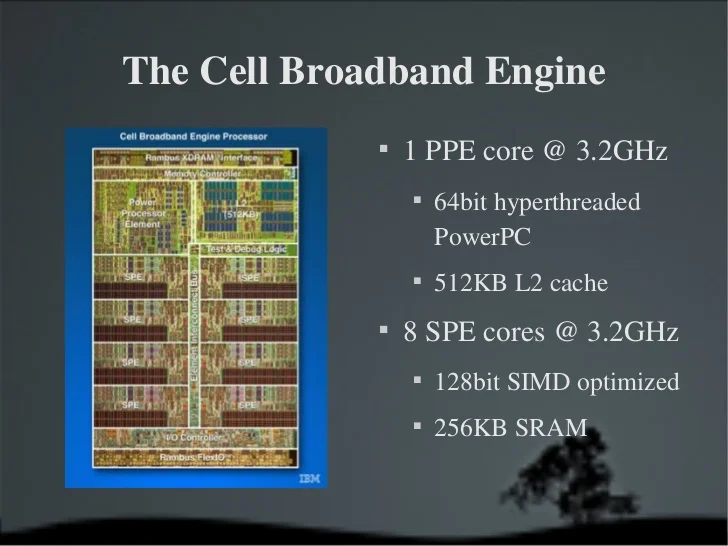

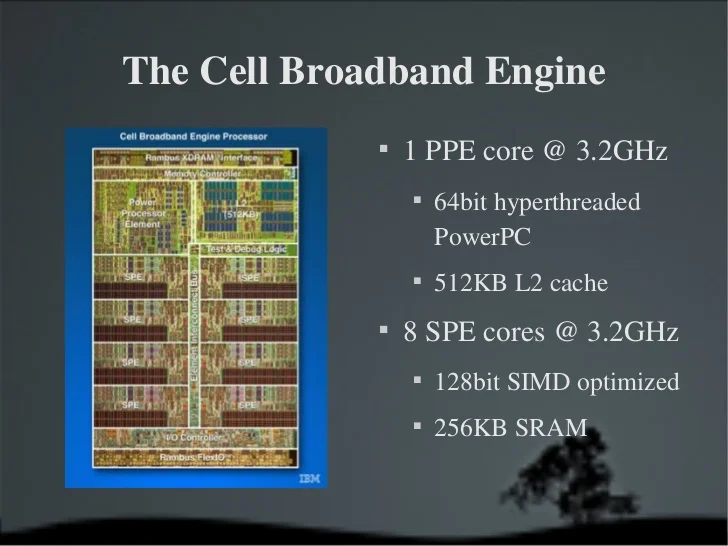

Let's get to grips with what the Cell really consists of before we get into the problems. The Cell consisted of one 64-bit, dual-threaded 3.2GHz PowerPC core (the "PPE", or Power Processing Element) and 8 SPEs (Synergistic Processing Elements) - of which 7 are available in-game. Some people misconstrue these SPEs as 8 cores, but this is a misconception - I'll explain why below. The SPEs are optimised for SIMD operations (Single instruction, multiple data - self-explanatory) and have 256KB of local store/SRAM. This doesn't sound so bad; what's the issue?

Well, the SPEs are fatally flawed in a few vital ways. The SPEs are able to talk to each other, but they do not have branch prediction - in other words, self-modifying, changing or "branching" code (such as common "if", "then" or "else" statements) is very problematic, as the PPE has to babysit the SPEs when branching code is in effect. This puts a lot of strain on the single PPC core because along with sending instructions to the GPU and likely doing work itself, it has to manage 7 active SPE units. The Cell is designed to compensate for this with compiler assistance, in which prepare-to-branch instructions are created, but even then it's not a perfect solution because

Let's suppose this isn't an issue and you have very efficient PPE code that cleanly manages this process - time to deal with the next big issue with the Cell: the pipeline. The Cell has a 23-stage pipeline, between the PPE and the SPEs; this is obviously quite a long pipeline to go through every instruction. This problem is exasperated by the fact that while the PPE has some very limited out-of-order execution capabilities (the ability to execute load instructions out-of-order), the SPEs are strictly in-order - that is to say, instead of being able to execute instructions without any fixed order, every instruction must be individually processed in-order. A long, in-order pipeline is a further expense to performance.

So let's assume you have written optimised code that minimises branching operations and has the PPE efficiently babysitting the SPEs. Surely that's not unreasonable?

Still not the end of the problems, unfortunately. The SPEs only have 256KB SRAM each dedicated to them, so unless your program is small enough to fit into that 256KB, it needs to be transferred in from memory. To add salt to the wound, the SPEs cannot directly access RAM; they have to perform a direct memory access (DMA) operation through the SPE's controller to transfer the data, 256KB at a time.

On top of all these issues in a game scenario, the SPEs often had to spend much of their processing time compensating for the PS3's weak, lacklustre and in some cases broken (see: antialiasing) GPU.

That sounds pretty bad, really. In order to fully "tap" the Cell, you have to write code that effectively doesn't change/branch, it has to be straightforward enough to fit within 256KB of memory and it has to be efficiently parallelised to ensure none of the SPEs are waiting for the pipeline to finish. In a game scenario, that is realistically impossible and the peak throughput of the Cell is unattainable, with the final results being significantly less than the brute computational power it is capable of. So maybe you're wondering about scientific calculations - like, you know, all those simulations run on Cell clusters? That could be feasible, right?

Unfortunately, even then, the Cell's design backfires. In theory, this use case is very practical - and indeed, physicians used this setup to simulate black holes among other things, and in terms of theoretical throughput, a cluster of Cells remained one of the top 50 supercomputers for longer than the average supercomputer. So what's the issue?

The problem is, many scientific calculations rely on double-precision floating point calculations due to the sheer amount of numbers involved - in other words, non-integer numbers involving 64-bit calculations - and this slaughters performance on the PS3. In theory, each SPE @ 3.2GHz is capable of 25.6 GFLOPS; when using double precision floating point numbers, each SPE is capable of a pitiful 1.8 GFLOPS (with the entire system capable of 20.8 GFLOPS in total, including the PPE, in these cases). At 25.6GFLOPS, all 8 SPEs would have a combined theoretical maximum throughput of 204.8 GFLOPS; with double precision floating point calculations, 14.4 GFLOPS is the collective total the SPEs can attain in an ideal situation, which is paltry.

To summarise, in a game situation, it is effectively impossible to "fully" utilise the Cell's potential; you would need to write extremely concise and small code that has the absolute minimum amount of branching (if/then/else etc.) and code that parallelises calculations across 7 chips - keeping them all busy and timing operations so as to waste zero time with the pipeline - while also compensating for a weak GPU. Even in a scientific context, the most applicable use for the Cell would be dealing with 32-bit numbers with very efficient code. So in theory, while the Cell is very competent, the usable potential of the chip is a fraction of what it may appear to offer on paper.

Let's get to grips with what the Cell really consists of before we get into the problems. The Cell consisted of one 64-bit, dual-threaded 3.2GHz PowerPC core (the "PPE", or Power Processing Element) and 8 SPEs (Synergistic Processing Elements) - of which 7 are available in-game. Some people misconstrue these SPEs as 8 cores, but this is a misconception - I'll explain why below. The SPEs are optimised for SIMD operations (Single instruction, multiple data - self-explanatory) and have 256KB of local store/SRAM. This doesn't sound so bad; what's the issue?

Well, the SPEs are fatally flawed in a few vital ways. The SPEs are able to talk to each other, but they do not have branch prediction - in other words, self-modifying, changing or "branching" code (such as common "if", "then" or "else" statements) is very problematic, as the PPE has to babysit the SPEs when branching code is in effect. This puts a lot of strain on the single PPC core because along with sending instructions to the GPU and likely doing work itself, it has to manage 7 active SPE units. The Cell is designed to compensate for this with compiler assistance, in which prepare-to-branch instructions are created, but even then it's not a perfect solution because

Let's suppose this isn't an issue and you have very efficient PPE code that cleanly manages this process - time to deal with the next big issue with the Cell: the pipeline. The Cell has a 23-stage pipeline, between the PPE and the SPEs; this is obviously quite a long pipeline to go through every instruction. This problem is exasperated by the fact that while the PPE has some very limited out-of-order execution capabilities (the ability to execute load instructions out-of-order), the SPEs are strictly in-order - that is to say, instead of being able to execute instructions without any fixed order, every instruction must be individually processed in-order. A long, in-order pipeline is a further expense to performance.

So let's assume you have written optimised code that minimises branching operations and has the PPE efficiently babysitting the SPEs. Surely that's not unreasonable?

Still not the end of the problems, unfortunately. The SPEs only have 256KB SRAM each dedicated to them, so unless your program is small enough to fit into that 256KB, it needs to be transferred in from memory. To add salt to the wound, the SPEs cannot directly access RAM; they have to perform a direct memory access (DMA) operation through the SPE's controller to transfer the data, 256KB at a time.

On top of all these issues in a game scenario, the SPEs often had to spend much of their processing time compensating for the PS3's weak, lacklustre and in some cases broken (see: antialiasing) GPU.

That sounds pretty bad, really. In order to fully "tap" the Cell, you have to write code that effectively doesn't change/branch, it has to be straightforward enough to fit within 256KB of memory and it has to be efficiently parallelised to ensure none of the SPEs are waiting for the pipeline to finish. In a game scenario, that is realistically impossible and the peak throughput of the Cell is unattainable, with the final results being significantly less than the brute computational power it is capable of. So maybe you're wondering about scientific calculations - like, you know, all those simulations run on Cell clusters? That could be feasible, right?

Unfortunately, even then, the Cell's design backfires. In theory, this use case is very practical - and indeed, physicians used this setup to simulate black holes among other things, and in terms of theoretical throughput, a cluster of Cells remained one of the top 50 supercomputers for longer than the average supercomputer. So what's the issue?

The problem is, many scientific calculations rely on double-precision floating point calculations due to the sheer amount of numbers involved - in other words, non-integer numbers involving 64-bit calculations - and this slaughters performance on the PS3. In theory, each SPE @ 3.2GHz is capable of 25.6 GFLOPS; when using double precision floating point numbers, each SPE is capable of a pitiful 1.8 GFLOPS (with the entire system capable of 20.8 GFLOPS in total, including the PPE, in these cases). At 25.6GFLOPS, all 8 SPEs would have a combined theoretical maximum throughput of 204.8 GFLOPS; with double precision floating point calculations, 14.4 GFLOPS is the collective total the SPEs can attain in an ideal situation, which is paltry.

To summarise, in a game situation, it is effectively impossible to "fully" utilise the Cell's potential; you would need to write extremely concise and small code that has the absolute minimum amount of branching (if/then/else etc.) and code that parallelises calculations across 7 chips - keeping them all busy and timing operations so as to waste zero time with the pipeline - while also compensating for a weak GPU. Even in a scientific context, the most applicable use for the Cell would be dealing with 32-bit numbers with very efficient code. So in theory, while the Cell is very competent, the usable potential of the chip is a fraction of what it may appear to offer on paper.